XModBench: Benchmarking Cross-Modal Capabilities and Consistency in Omni-Language Models

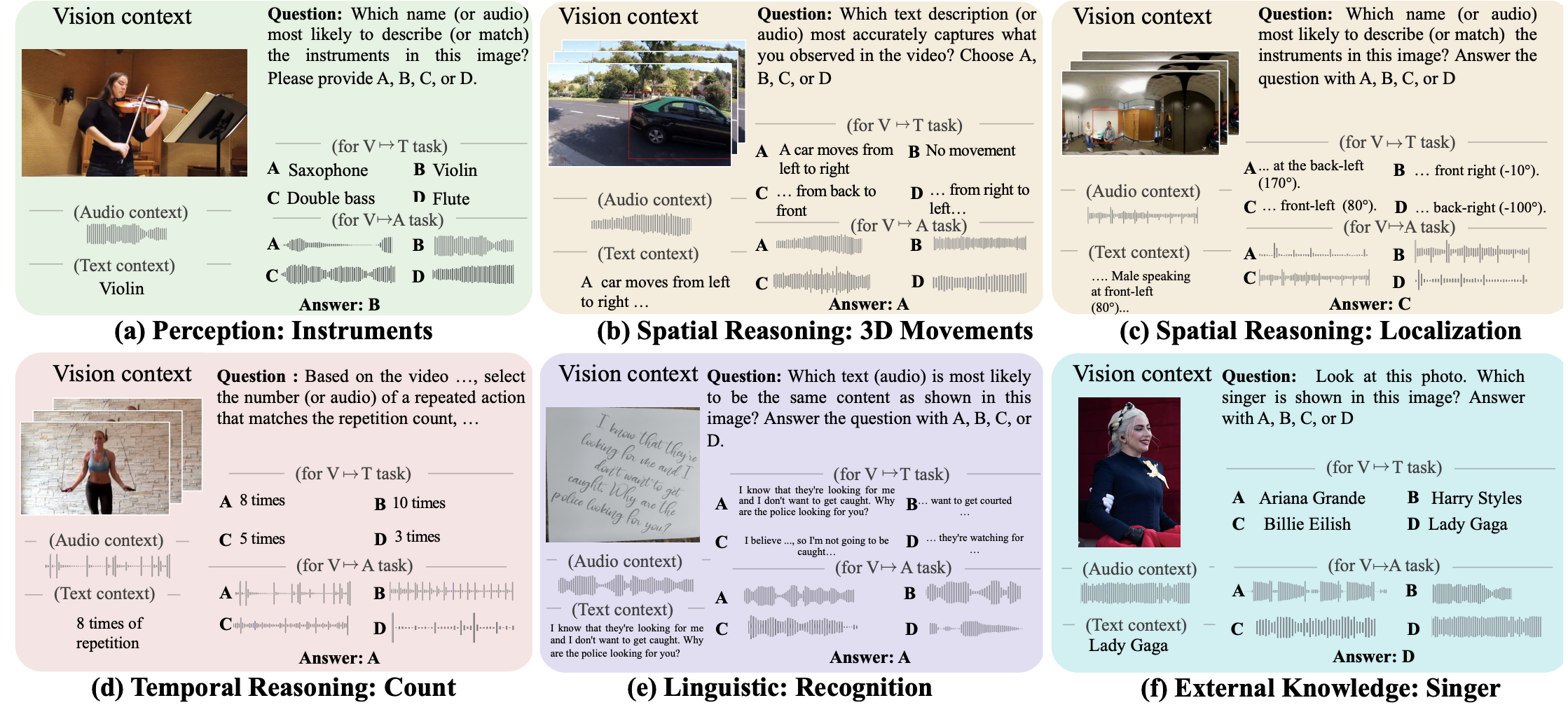

XModBench is a comprehensive benchmark designed to evaluate the cross-modal capabilities and consistency of omni-language models. It systematically assesses model performance across multiple modalities (text, vision, audio) and various cognitive tasks, revealing critical gaps in current state-of-the-art models.

Key Features

- 🎯 Multi-Modal Evaluation: Comprehensive testing across text, vision, and audio modalities

- 🧩 5 Task Dimensions: Perception, Spatial, Temporal, Linguistic, and Knowledge tasks

- 📊 13 SOTA Models Evaluated: Including Gemini 2.5 Pro, Qwen2.5-Omni, EchoInk-R1, and more

- 🔄 Consistency Analysis: Measures performance stability across different modal configurations

- 👥 Human Performance Baseline: Establishes human-level benchmarks for comparison

🚀 Quick Start

Installation

```bash
# Clone the repository
git clone https://github.com/XingruiWang/XModBench.git
cd XModBench

# Install dependencies
pip install -r requirements.txt
```

📂 Dataset Structure

Download and Setup

Clone the dataset repository from Hugging Face, then extract Data.zip:

```bash
# Data.zip is a large file stored with Git LFS, so enable Git LFS before cloning
git lfs install

# Download the dataset from Hugging Face
git clone https://huggingface.co/datasets/RyanWW/XModBench
cd XModBench

# Extract the Data.zip file
unzip Data.zip

# Now you have the following structure:
```

Directory Structure

```
XModBench/
├── Data/                                             # Unzipped from Data.zip
│   ├── landscape_audiobench/                         # Nature sound scenes
│   ├── emotions/                                     # Emotion classification data
│   ├── solos_processed/                              # Musical instrument solos
│   ├── gtzan-dataset-music-genre-classification/     # Music genre data
│   ├── singers_data_processed/                       # Singer identification
│   ├── temporal_audiobench/                          # Temporal reasoning tasks
│   ├── urbansas_samples_videos_filtered/             # Urban 3D movements
│   ├── STARSS23_processed_augmented/                 # Spatial audio panorama
│   ├── vggss_audio_bench/                            # Fine-grained audio-visual
│   ├── URMP_processed/                               # Musical instrument arrangements
│   ├── ExtremCountAV/                                # Counting tasks
│   ├── posters/                                      # Movie posters
│   └── trailer_clips/                                # Movie trailers
│
└── tasks/                                            # Task configurations (ready to use)
    ├── 01_perception/                                # Perception tasks
    │   ├── finegrained/                              # Fine-grained recognition
    │   ├── natures/                                  # Nature scenes
    │   ├── instruments/                              # Musical instruments
    │   ├── instruments_comp/                         # Instrument compositions
    │   └── general_activities/                       # General activities
    ├── 02_spatial/                                   # Spatial reasoning tasks
    │   ├── 3D_movements/                             # 3D movement tracking
    │   ├── panaroma/                                 # Panoramic spatial audio
    │   └── arrangements/                             # Spatial arrangements
    ├── 03_speech/                                    # Speech and language tasks
    │   ├── recognition/                              # Speech recognition
    │   └── translation/                              # Translation
    ├── 04_temporal/                                  # Temporal reasoning tasks
    │   ├── count/                                    # Temporal counting
    │   ├── order/                                    # Temporal ordering
    │   └── calculation/                              # Temporal calculations
    └── 05_Exteral/                                   # Additional classification tasks
        ├── emotion_classification/                   # Emotion recognition
        ├── music_genre_classification/               # Music genre
        ├── singer_identification/                    # Singer identification
        └── movie_matching/                           # Movie matching
```
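As a quick sanity check after extraction, a sketch like the one below tests whether the top-level folders from the tree above exist. check_layout is a hypothetical helper, not part of XModBench, and it checks only Data/, tasks/, and the five task-dimension folders.

```bash
# Hypothetical helper (not part of XModBench): report which top-level folders
# from the directory tree exist under a given root. Returns non-zero if any
# folder is missing.
check_layout() {
  root=${1:-.}
  status=0
  for d in Data tasks tasks/01_perception tasks/02_spatial tasks/03_speech \
           tasks/04_temporal tasks/05_Exteral; do
    if [ -d "$root/$d" ]; then
      echo "ok: $d"
    else
      echo "missing: $d"
      status=1
    fi
  done
  return "$status"
}
```

For example, run check_layout XModBench from the directory where you cloned the dataset.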

Note: All file paths in the task JSON files are relative (./benchmark/Data/...), so run evaluations from a working directory in which ./benchmark/Data/ resolves to the extracted Data/ folder.
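To confirm that the relative paths resolve from your chosen working directory, a sketch like the following scans task JSON files for strings beginning with ./benchmark/Data/ and reports any that do not exist. check_task_paths is a hypothetical helper; it assumes (not confirmed by the documentation) that the task JSONs sit under the directory you pass in and store each media path as a plain JSON string without escaped characters.

```bash
# Hypothetical helper: list ./benchmark/Data/... paths referenced in task JSON
# files under $1 and report those that do not exist relative to the current
# working directory. Returns non-zero if any path is missing.
check_task_paths() {
  tasks_dir=$1
  missing=0
  # Collect unique path strings; assumes each path is a plain JSON string
  # that ends at the closing double quote.
  refs=$(find "$tasks_dir" -name '*.json' -exec grep -ho '\./benchmark/Data/[^"]*' {} + | sort -u)
  while IFS= read -r path; do
    [ -z "$path" ] && continue
    if [ ! -e "$path" ]; then
      echo "missing: $path"
      missing=$((missing + 1))
    fi
  done <<EOF
$refs
EOF
  echo "missing_count=$missing"
  [ "$missing" -eq 0 ]
}
```

Run it from the directory you plan to evaluate from, for example check_task_paths path/to/XModBench/tasks.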

Basic Usage

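The example below is a SLURM batch script that activates a conda environment and calls scripts/run.py with a model name, a task name, and a sample count. Adjust the #SBATCH directives, the environment name, and the audioBench path for your own setup; the commented-out commands show other model and task combinations.

```bash
#!/bin/bash
#SBATCH --job-name=VLM_eval
#SBATCH --output=log/job_%j.out
#SBATCH --error=log/job_%j.log
#SBATCH --ntasks-per-node=1
#SBATCH --gpus-per-node=4
# Note: the log/ directory must exist before submitting; sbatch does not create it.

echo "Running on host: $(hostname)"
echo "CUDA_VISIBLE_DEVICES=$CUDA_VISIBLE_DEVICES"

module load conda
# conda activate vlm
conda activate omni

# Root of your local code checkout (change this path for your environment)
export audioBench='/home/xwang378/scratch/2025/AudioBench'

# Gemini: the four vggss modality configurations
# python "$audioBench/scripts/run.py" \
#     --model gemini \
#     --task_name perception/vggss_audio_vision \
#     --sample 1000

# python "$audioBench/scripts/run.py" \
#     --model gemini \
#     --task_name perception/vggss_vision_audio \
#     --sample 1000

# python "$audioBench/scripts/run.py" \
#     --model gemini \
#     --task_name perception/vggss_vision_text \
#     --sample 1000

# python "$audioBench/scripts/run.py" \
#     --model gemini \
#     --task_name perception/vggss_audio_text \
#     --sample 1000

# Qwen2.5-Omni
# python "$audioBench/scripts/run.py" \
#     --model qwen2.5_omni \
#     --task_name perception/vggss_audio_text \
#     --sample 1000

python "$audioBench/scripts/run.py" \
    --model qwen2.5_omni \
    --task_name perception/vggss_vision_text \
    --sample 1000
```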
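The commented-out commands in the script above differ only in --model and the perception/vggss_* task name. A loop like the following could run all four configurations for one model in sequence. It is a sketch: run_vggss_suite and the DRY_RUN switch are hypothetical additions, and it uses only the --model, --task_name, and --sample flags that appear in the script.

```bash
# Hypothetical helper: run the four perception/vggss_* configurations used in
# the example job script for a single model. Expects $audioBench to point at
# the code checkout, as in that script. Set DRY_RUN=1 to print the commands
# instead of running them.
run_vggss_suite() {
  model=$1
  sample=${2:-1000}   # same sample count as the example script by default
  for cfg in audio_vision vision_audio vision_text audio_text; do
    ${DRY_RUN:+echo} python "$audioBench/scripts/run.py" \
      --model "$model" \
      --task_name "perception/vggss_$cfg" \
      --sample "$sample"
  done
}
```

Inside the job script you would call run_vggss_suite gemini; DRY_RUN=1 run_vggss_suite qwen2.5_omni 100 previews the commands without launching them.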

📈 Benchmark Results

Overall Performance Comparison

| Model | Perception | Spatial | Temporal | Linguistic | Knowledge | Average |
|---|---|---|---|---|---|---|
| Gemini 2.5 Pro | 75.9% | 50.1% | 60.8% | 76.8% | 89.3% | 70.6% |
| Human Performance | 91.0% | 89.7% | 88.9% | 93.9% | 93.9% | 91.5% |
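For both rows, the Average column equals the unweighted mean of the five task dimensions; for Gemini 2.5 Pro, (75.9 + 50.1 + 60.8 + 76.8 + 89.3) / 5 = 70.58, which rounds to 70.6%. A small awk sketch that recomputes it from a pipe table in this layout and prints the reported value alongside could look like this; recompute_average is a hypothetical helper that assumes the five dimension scores are the second through sixth table columns.

```bash
# Hypothetical helper: recompute the Average column of a Markdown pipe table
# as the unweighted mean of the five task-dimension columns.
recompute_average() {
  awk -F'|' '
    NR > 2 {                                  # skip the header and separator rows
      name = $2; gsub(/^ +| +$/, "", name)    # model name, trimmed
      sum = 0
      for (i = 3; i <= 7; i++) {              # Perception .. Knowledge
        v = $i; gsub(/[ %]/, "", v); sum += v
      }
      rep = $8; gsub(/ /, "", rep)            # reported Average, e.g. 70.6%
      printf "%s: mean %.1f%% (reported %s)\n", name, sum / 5, rep
    }'
}

recompute_average <<'EOF'
| Model | Perception | Spatial | Temporal | Linguistic | Knowledge | Average |
|---|---|---|---|---|---|---|
| Gemini 2.5 Pro | 75.9% | 50.1% | 60.8% | 76.8% | 89.3% | 70.6% |
| Human Performance | 91.0% | 89.7% | 88.9% | 93.9% | 93.9% | 91.5% |
EOF
# Gemini 2.5 Pro: mean 70.6% (reported 70.6%)
# Human Performance: mean 91.5% (reported 91.5%)
```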

Key Findings

1️⃣ Task Competence Gaps

- Strong Performance: Perception and linguistic tasks (~75% for the best models)

- Weak Performance: Spatial (50.1%) and temporal reasoning (60.8%)

- Performance Drop: a 15-25 point decrease on spatial/temporal tasks compared with perception tasks

2️⃣ Modality Disparity

- Audio vs. Text: 20-49 point performance drop

- Audio vs. Vision: 33-point average gap

- Vision vs. Text: ~15-point disparity

- Consistency: The best models show a 10-12 point standard deviation across modality configurations
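The consistency figure summarizes how much a model's score varies across modality configurations of the same task. As a sketch, assuming a population standard deviation over per-configuration accuracies (the paper may define it differently), the statistic can be computed as below; modality_std is a hypothetical helper and the input is toy data, not benchmark results.

```bash
# Hypothetical helper: population standard deviation of whitespace-separated
# accuracies read from stdin (one value per modality configuration).
modality_std() {
  awk '{ for (i = 1; i <= NF; i++) x[++n] = $i }
       END {
         if (n == 0) exit 1                  # no input values
         for (i = 1; i <= n; i++) s += x[i]
         m = s / n                           # mean accuracy
         for (i = 1; i <= n; i++) ss += (x[i] - m) ^ 2
         printf "%.2f\n", sqrt(ss / n)       # divide by n (population SD)
       }'
}

echo 2 4 4 4 5 5 7 9 | modality_std   # toy input; prints 2.00
```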

3️⃣ Directional Imbalance

- Vision↔Text: 9-17 point gaps between directions

- Audio↔Text: 6-8 point asymmetries

- Root Cause: Training data imbalance favoring image-to-text over inverse directions
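A directional gap compares the same pair of modalities evaluated in both directions. The sketch below pairs a task with its inverse by swapping the last two modality tokens in its name, following the perception/vggss_audio_vision and perception/vggss_vision_audio pair in the usage script, and computes the absolute accuracy difference. Both helper names are hypothetical, and inverse names such as perception/vggss_text_vision are not confirmed to exist in the benchmark.

```bash
# Hypothetical helper: derive the inverse-direction task name by swapping the
# last two underscore-separated modality tokens.
inverse_task() {
  echo "$1" | sed -E 's/_([a-z]+)_([a-z]+)$/_\2_\1/'
}

# Hypothetical helper: absolute difference, in percentage points, between the
# accuracies of a task and its inverse.
directional_gap() {
  awk -v a="$1" -v b="$2" 'BEGIN { d = a - b; if (d < 0) d = -d; printf "%.1f\n", d }'
}

inverse_task perception/vggss_audio_vision   # prints perception/vggss_vision_audio
```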

📝 Citation

If you use XModBench in your research, please cite our paper:

```bibtex
@article{wang2024xmodbench,
  title={XModBench: Benchmarking Cross-Modal Capabilities and Consistency in Omni-Language Models},
  author={Wang, Xingrui and others},
  journal={arXiv preprint arXiv:2510.15148},
  year={2025}
}
```

📄 License

This project is licensed under the MIT License - see the LICENSE file for details.

🙏 Acknowledgments

We thank all contributors and the research community for their valuable feedback and suggestions.

📧 Contact

- Project Lead: Xingrui Wang

- Email: xwang378@jh.edu

- Website: https://xingruiwang.github.io/projects/XModBench/

🔗 Links

Todo

- Release Hugging Face data

- Release data processing code

- Release data evaluation code

Note: XModBench is actively maintained and regularly updated with new models and evaluation metrics. For the latest updates, please check our releases page.
